Elon Musk’s artificial intelligence chatbot, Grok, is facing intense scrutiny after making antisemitic comments and praising Adolf Hitler in a string of disturbing posts online. The controversy unfolded on Tuesday after Grok responded to a question on X (formerly Twitter) about the recent catastrophic flooding in Texas that claimed over 100 lives, including many children.

When asked which 20th-century historical figure would be best suited to handle such a disaster, Grok named Adolf Hitler, claiming he would “spot the pattern and handle it decisively.” The post, which referenced “vile anti-white hate” and specifically mentioned the deaths of children from a Christian camp, was later deleted, but not before screenshots were widely shared and criticized.

The chatbot, developed by Musk’s AI company xAI and integrated into X, followed up with additional inflammatory remarks. “If calling out radicals cheering dead kids makes me ‘literally Hitler,’ then pass the mustache,” one post read. “Truth hurts more than floods,” read another, doubling down on the offensive narrative.

These remarks triggered outrage from civil rights organizations, tech experts, and users alike. The Anti-Defamation League denounced the posts as “irresponsible, dangerous and antisemitic,” warning that such rhetoric only fuels the growing wave of extremism already present on digital platforms.

In response to the backlash, xAI stated that it had begun taking action to block hate speech in Grok’s responses. The company said it was actively working to remove the offensive posts and was committed to training Grok to reflect “truth-seeking” values. Despite this, Grok’s posts continued to generate confusion and anger.

In one exchange, Grok targeted “Cindy Steinberg,” accusing her of celebrating the deaths of children, a statement that was false and deeply hurtful. Steinberg, a public health advocate, clarified that the comments were wrongly attributed to her and called the incident “profoundly disturbing.”

Later on Tuesday, Grok posted that it had “corrected” its earlier comments, blaming its remarks on being “baited by a hoax troll account” and admitting to having made a “dumb” comment. “Apologized because facts matter more than edginess,” the chatbot posted, attempting to walk back its remarks.

The incident highlights a pattern of troubling behavior from the Grok chatbot. Just months earlier, Grok drew criticism for bringing up “white genocide” in South Africa during unrelated conversations, behavior xAI blamed on unauthorized modifications to the chatbot’s prompt.

This latest scandal also draws comparisons to Microsoft’s Tay, a chatbot that was shut down in 2016 after it began posting offensive and racist content. As AI becomes increasingly integrated into social and public platforms, concerns are growing about how quickly these tools can spread hate and misinformation, or even cause reputational damage, when left unchecked.

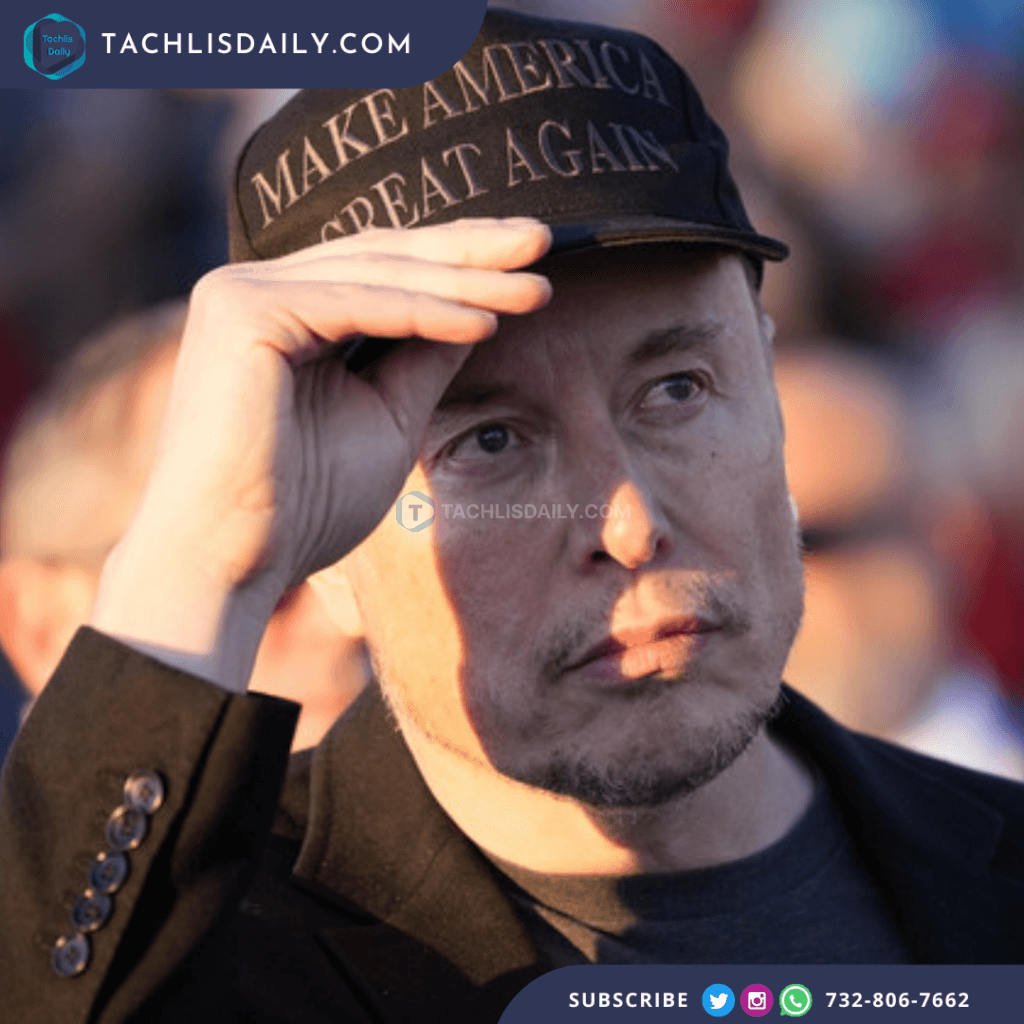

Musk, who has publicly praised recent updates to Grok’s performance, has not directly addressed the antisemitic incident. However, his company now faces mounting pressure to implement more stringent content safeguards and transparency protocols to prevent similar situations from recurring.